Quality is Exciting, Automation is Boring. Holism and Atomicity are Crucial

Automation everywhere? On Accuracy and Correctness of Predictions

Expectations, or better: “expected results“, as we have them in functional testing, user stories, use cases or business cases, can only be applied for the checking of outcomes, where we have situations in which we know the answers to our questions. I.e., areas, where we use machines to make us faster. Arithmetics e.g., or simple business processes, where the human is underwhelmed and inaccurate and should be replaced by an automaton.

Automation, as it seems, is only applicable in boring fields of work.

More sensible use of digital machinery is found in predictive areas. Where we either e.g. literally want to predict the future (forecast) or understand risk (finance) or similar. Some parts of the software, however, are of predictive nature in most if not all IT solutions. It’s complicated, no, wait, complex.

Unfortunately, this is incompatible to standardized rules as wished by most administration.

There is no conveyor belt like work in the software industry.

But there is no way of knowing beforehand what a predictive instrument should deliver as an outcome, by definition. Expected results, but also our dear friends “Definition of Done“, “Acceptance Criteria“ and in the end also “User Story“ have no raison d’être in this whole area.

We need more quality – not medieval style, centralized regulation.

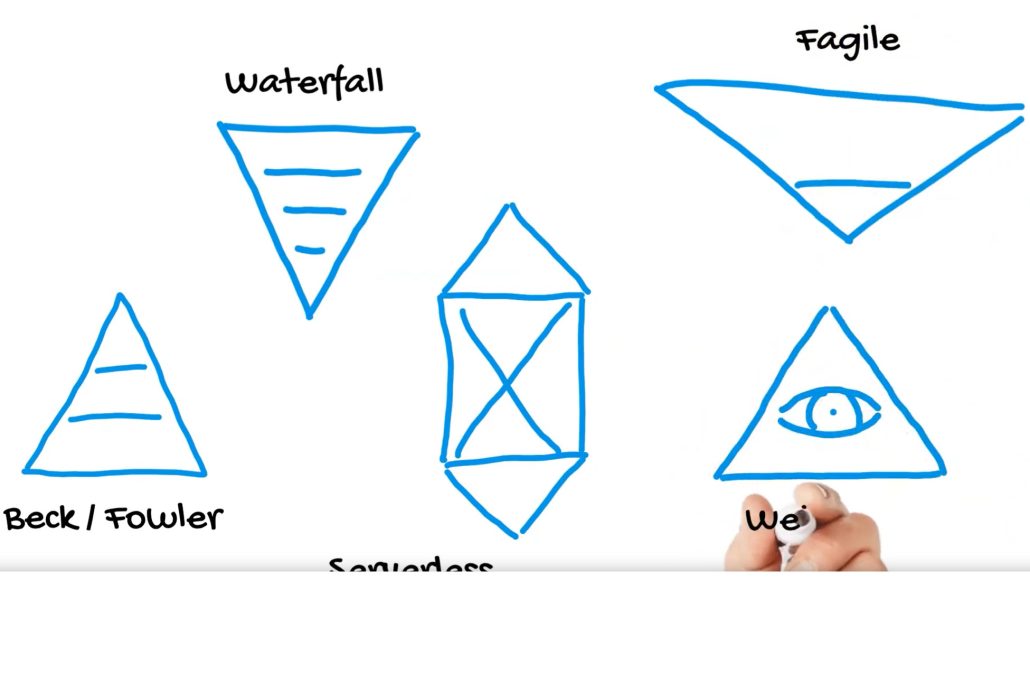

It is therefore of vital importance to have a solid ground, a way of not making mistakes on a base level. Atomic quality (Unit Testing) is the only way not to get combinations of complex systems producing inaccurate results in an unrecognizable way. The latter is leading to a world that accepts old and wrong results or bad practice as a reference and enables religious wars against good innovation because it is not understood. Like the legend of iron in spinach.

AI is no better.

This, of course, also holds true for machine learning, as there is no way of telling how one single weight or parameter influences the entire machine. The rising hype of “AI” called machine learning leads to even more people joining the “vernacular” programmers community. Quality is neglected all over, professional crafting is marginalized, even seen as unnecessary. If we ask data scientists about testing, the answer is „of course, we have training sets and test sets“ (-: . Expect to have more wrong statistics, fake news, crashing planes and missing target space missions.

Regression Testing is not a Save Space?

One popular attempt to alleviate the problem is to see if the built solution would have predicted the present if given the past as input. Experience as wisdom, which, in the end, also is what machine learning does. Experience, though, cannot predict the future.

Use case based functional testing without checking for outcome is not totally useless. It can reveal coverage statistics, bad exception handling and excess of runtime. But on the other hand, semi randomly generated tests can do that even better.

Yesterday’s weather.

Using the weather forecast as an example: The quality of the outcome increased over the years mainly by abstraction/extraction of better models out of the bare data and by putting sensors everywhere to get good data from microclimates in the first place. Not by having countless red regression tests in legacy software.

Commonly understood underlying models

The other crucial element for not making mistakes is speaking the same language and using a common domain to ensure the outcomes are not driven by misunderstandings and single interest drivers. Documentation is not good enough in this case. We need clear contracts.

So, now what?

Bottom line. There is no quality without unit testing and mutual theory building and good plausibility is reached with intuition and exploratory testing. It’s time to stop reading code and start to writing it better.

But I repeat myself.

Contact Information

Danilo Biella, Agile & Quality Professional

Unsere neusten Insights

Das könnte dich auch interessieren